About

The Laboratory of Computer Graphics and Virtual Environments (CG&VE) is equipped not only to face new challenges in areas such as Immersive Environments, Digital Games and User Experience, but also to broaden knowledge in Computer Graphics, in areas such as Image Rendering and Visualisation.

The research team is composed of approximately 20 senior researchers holding PhDs, together with postdoctoral and PhD-candidate research fellows, who conduct their research at CSIG.

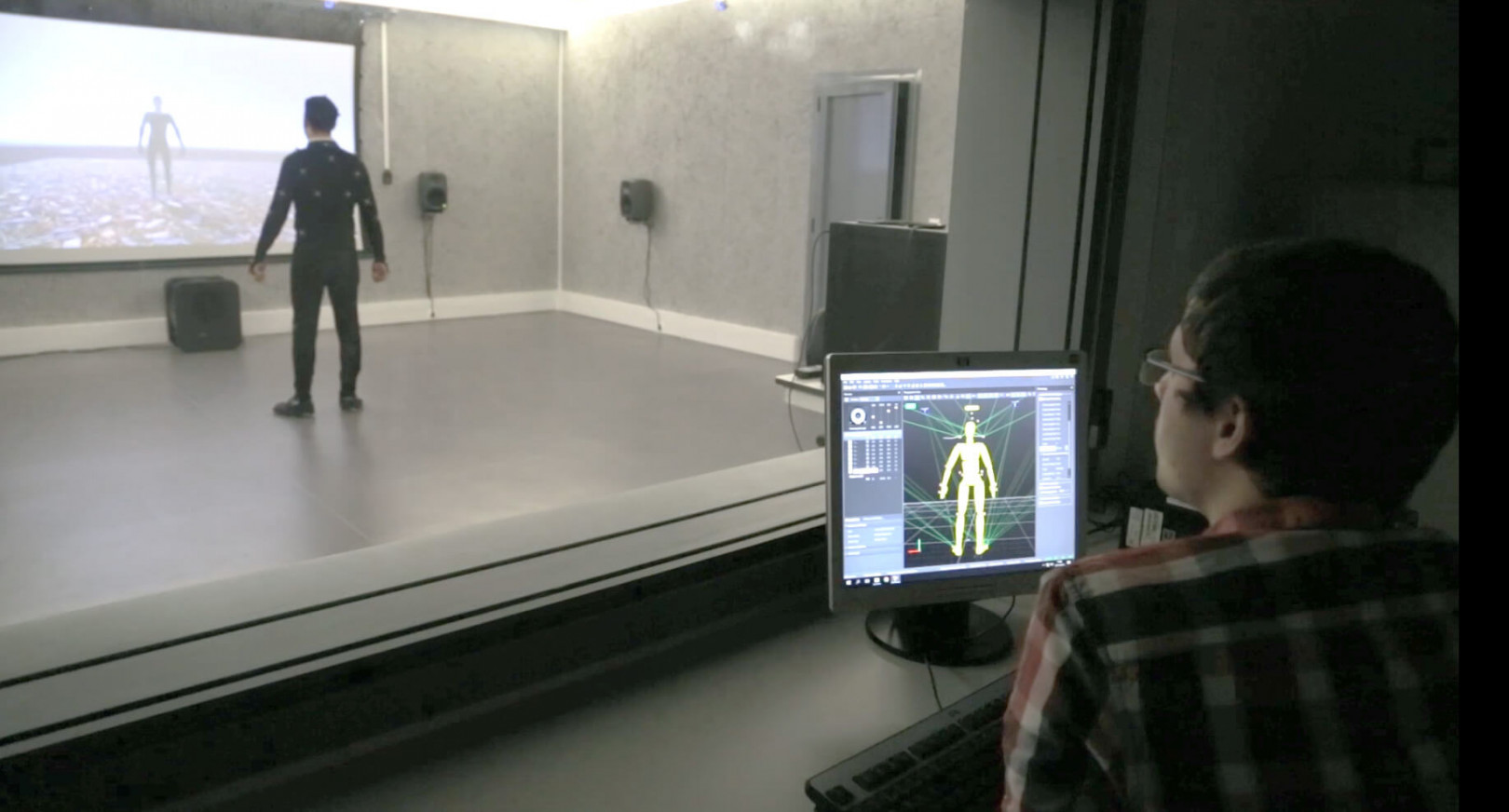

Created in Porto in 1985, the Laboratory of Computer Graphics and Virtual Environments now has a new state-of-the-art laboratory in Vila Real: the MASSIVE (Multimodal Acknowledgeable multiSenSory Immersive Virtual Environments) Laboratory.

Location: FEUP Campus, Porto, and UTAD Campus, Vila Real